Frequently Asked Questions

Get answers to common questions about using and troubleshooting Pieces for Developers.

What is Pieces OS? How does it work?

Pieces OS is the background service that enables Pieces to work locally and handles communication with our various integrations. Without Pieces OS, Pieces for Developers would not be able to save your snippets, generate responses to your questions, or interact with any of your installed plugins.

Pieces OS also enables our cloud-enabled features like link sharing.

Head over to our installation page to get started.

How do I check my Pieces versions and update the app?

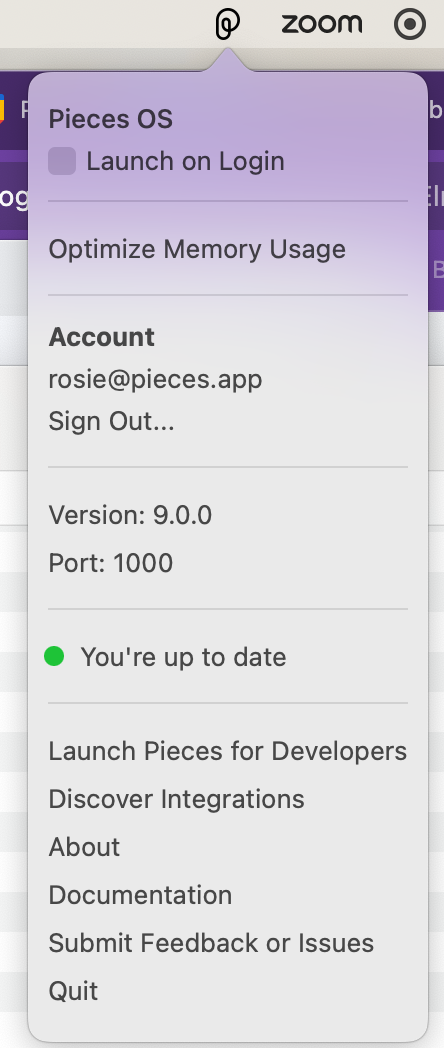

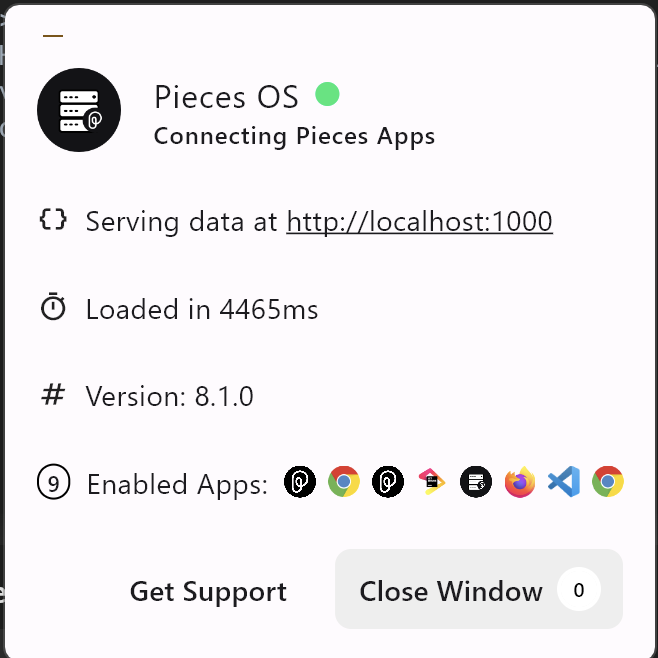

Pieces OS

- macOS

- Windows

- Linux

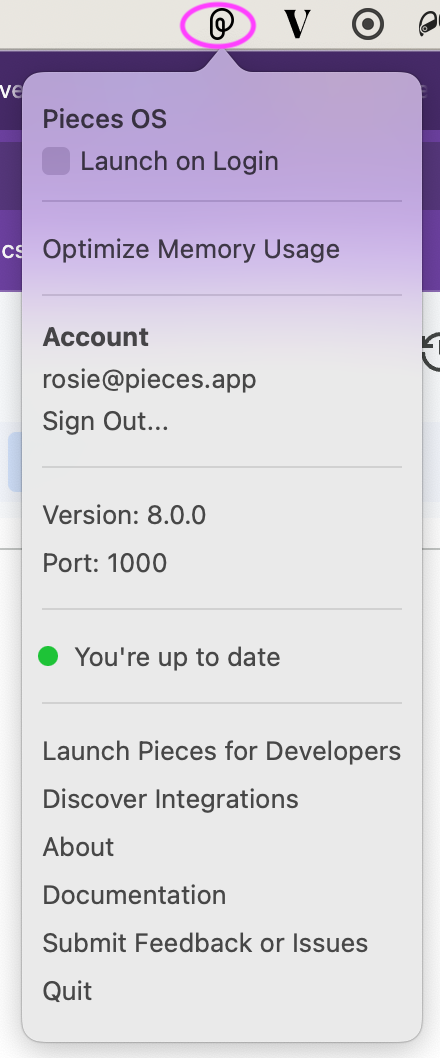

Pieces OS on macOS: Click the P icon in your menu bar and view your update status in the dropdown.

Pieces OS on Windows: Click the PiecesOS icon in your taskbar and view your update status in the popup.

On Windows, if you installed via the Pieces Suite Installer, you don't have to do anything to update the applications. The Pieces Suite Installer provides the option to update the applications manually, but you never have to launch it again to get updates. You don't even have to update from within the applications themselves: you can ignore any update prompts that appear, and the applications will be updated automatically in the background the next time you restart your computer or the applications.

If you installed via the standalone EXE setup, a different update mechanism is used, which only checks for updates once a day or on application launch. You will receive a prompt where you can install or delay the update.

If you installed via the standalone EXE, we recommend switching over to the Pieces Suite Installer, as you will never have to click anything to get updates. Be sure to first uninstall Pieces OS and the Pieces for Developers desktop app to prevent any conflicts. Your user-generated data will not be affected.

On Linux, to see the installed version of the Pieces OS and Pieces for Developers desktop app snap packages, run:

snap info pieces-os

snap info pieces-for-developers

This will also show any available updates.

To update Pieces OS and the desktop app, you can run:

sudo snap refresh
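When collecting version numbers for a support request, it can help to pull just the installed version out of the snap info output. The following is a minimal sketch, assuming the output contains the usual installed: line with the version as its second field. The function name installed_snap_version is hypothetical and not part of any Pieces tooling.

```shell
# Print the installed version found in `snap info` output read from stdin.
# Assumes a line of the form "installed: <version> (<rev>) <size>".
# Returns 1 if no such line is present (for example, the snap is not installed).
installed_snap_version() {
  awk '$1 == "installed:" { print $2; found = 1 } END { exit (found ? 0 : 1) }'
}

# Example (requires snapd and the packages named in this FAQ):
#   snap info pieces-os | installed_snap_version
#   snap info pieces-for-developers | installed_snap_version
```

To update only the Pieces snaps rather than every snap on the system, snap refresh also accepts package names, for example sudo snap refresh pieces-os pieces-for-developers.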

Pieces Desktop App

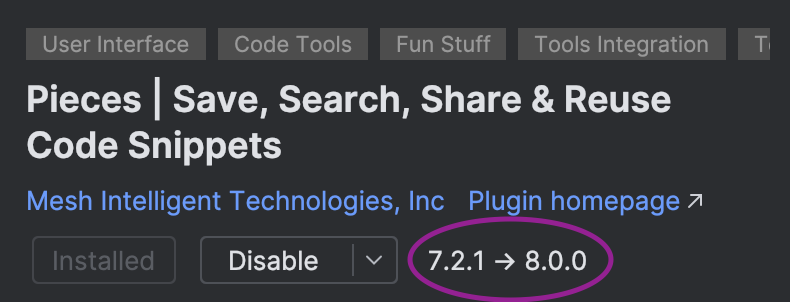

Pieces for VS Code

Select Extensions > Search for Pieces for VS Code > Select the extension > View the version next to the extension name

Pieces for JetBrains

Select Settings > Plugins > Search for Pieces for Developers > View the version

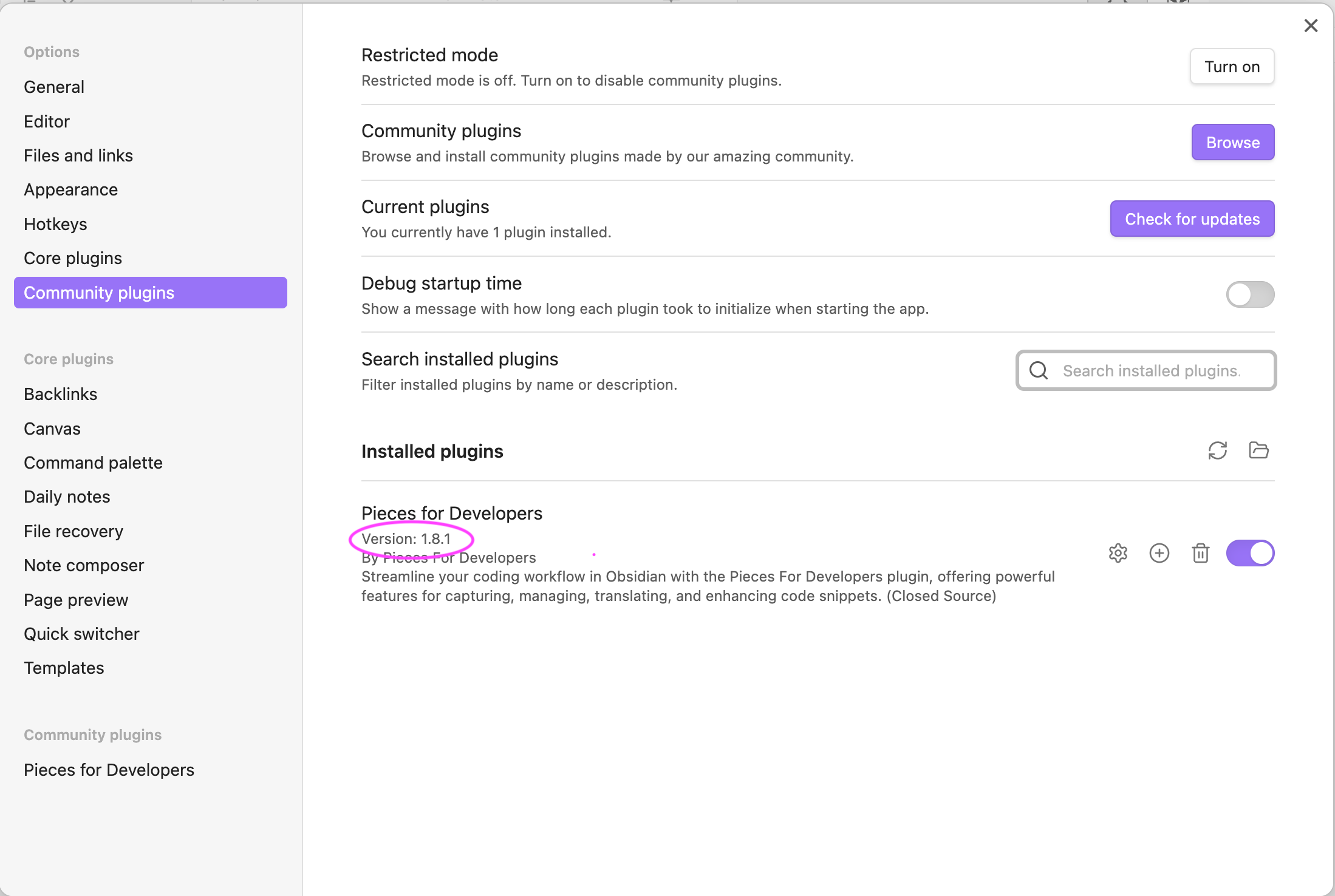

Pieces for Obsidian

Select Settings > Community Plugins > View the version under Pieces for Developers

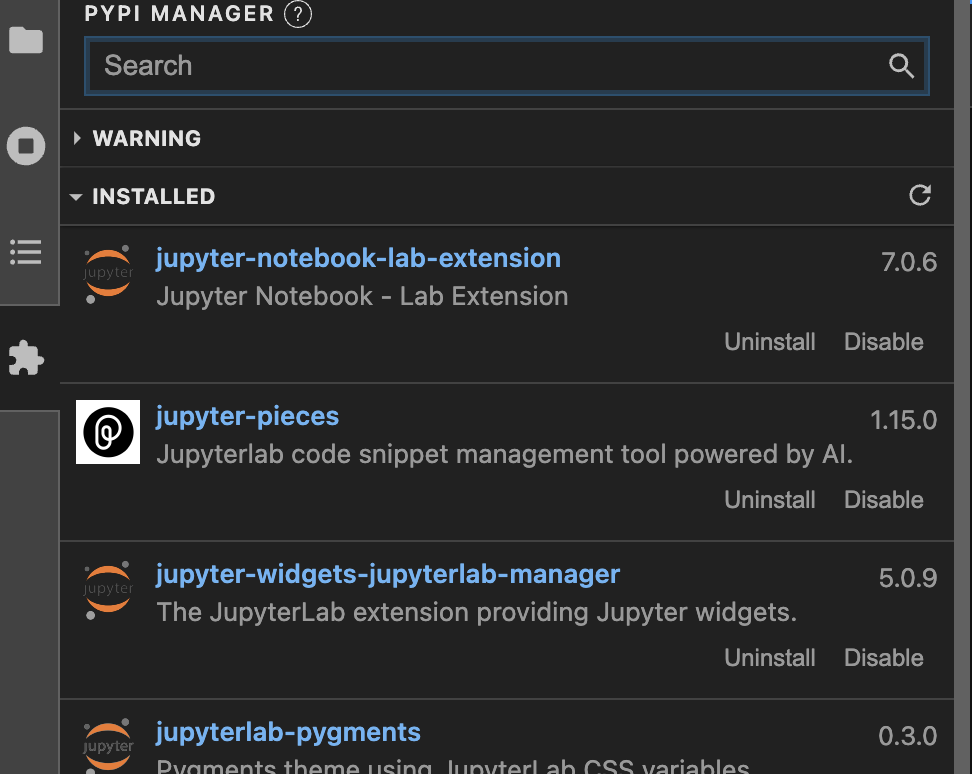

Pieces for JupyterLab

Select Extension Manager > View the version next to jupyter-pieces

If you are on a Unix-like system, you can also run the following command to check the installed version of the jupyter-pieces extension:

pip list | grep jupyter-pieces

Can’t connect to Pieces OS

Please note that users who have a proxy set up on their machines may experience a connection failure with Pieces. We have only seen this on Windows so far, but it could also affect macOS or Linux. We expect this to be fixed with PiecesOS Release 8.1.0.

- macOS

- Windows

- Linux

macOS: follow these steps to debug:

- Restart your computer and launch the application again.

- Check the version of macOS you are running: we support macOS 12.0 Monterey or higher.

- Ensure PiecesOS is running: you should see the Pieces icon in your menu bar.

- Check for updates using that dropdown and update if possible.

- Ensure that the desktop application or whichever plugin you are experiencing this with (e.g. VS Code, Chrome, Teams, etc.) is up-to-date.

If you’re still having problems, email support@pieces.app and include the following:

- Your macOS version, PiecesOS version, Pieces Desktop App version

- Zip up any files you see at the following paths and attach them:

/Users/[username]/Library/com.pieces.os/Support/production/logs

/Users/[username]/Library/com.pieces.pfd/production/logs

- Ping the PiecesOS endpoint by clicking the following link. If you get a response, please send that response in the email as well.

http://localhost:1000/applications
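If you prefer the terminal to clicking the link, the same check can be sketched with curl. This is an illustration only: it assumes curl is installed, the function name ping_pieces_os is hypothetical, and the default URL is the one given above.

```shell
# Query the local PiecesOS endpoint and print the response body.
# Returns non-zero if curl is missing, the connection fails,
# or the server answers with an HTTP error status.
ping_pieces_os() {
  local url="${1:-http://localhost:1000/applications}"
  if ! command -v curl >/dev/null 2>&1; then
    echo "curl is not installed" >&2
    return 2
  fi
  # --fail: treat HTTP error statuses as failures
  # --max-time 5: give up after five seconds instead of hanging
  curl --silent --show-error --fail --max-time 5 "$url"
}

# Example: save the response so it can be attached to the support email
#   ping_pieces_os > pieces-os-response.txt
```

A non-zero exit status with a "connection refused" style message suggests that nothing is listening on that port, which is worth mentioning in the email too.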

Windows: follow these steps to debug:

- Restart your computer and launch the application again.

- Check the version of Windows you are running and make sure you are up-to-date.

- Ensure PiecesOS is running: you should see the PiecesOS icon in your system tray

- Do you have any antivirus or firewall-related software on your machine? You can attempt to whitelist PiecesOS and the Pieces Desktop app with your antivirus software; otherwise, it may continue to block the application.

- Is your machine part of a corporate environment? You may need to try a different installation method, such as installing through the Microsoft Store, or you can ask your system administrator whether they can allow the applications to run.

If you’re still having problems, send an email to support@pieces.app and include the following:

- Your Windows version, PiecesOS version, Pieces Desktop App version

- Zip up any files you see at the following paths and attach them:

- Documents > com.pieces.os

- Documents > com.pieces.pfd

- Ping the PiecesOS endpoint by clicking the following link. If you get a response, please send that response in the email as well.

http://localhost:1000/applications

Linux: follow these steps to debug:

- Check the version of Ubuntu you are running. We currently support 22.04 and above.

- Submit a support ticket and include debugging logs so we can diagnose the issue. To print a debugging log of Pieces OS, run the following command in your terminal:

pieces-os --debug

Do the same with Pieces for Developers:

pieces-for-developers --debug
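To attach the debugging output to a support ticket, it is convenient to save it to a file while still seeing it in the terminal. Below is a minimal sketch using tee; the helper name capture_debug_log and the log file names are hypothetical.

```shell
# Run a command, show its output, and save a copy of stdout and stderr to a file.
# Usage: capture_debug_log <logfile> <command> [args...]
capture_debug_log() {
  local logfile="$1"
  shift
  "$@" 2>&1 | tee "$logfile"
}

# Example with the commands from this FAQ. If a command keeps running,
# stop it with Ctrl+C once the problem has been reproduced:
#   capture_debug_log pieces-os-debug.log pieces-os --debug
#   capture_debug_log pieces-for-developers-debug.log pieces-for-developers --debug
```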

Common Installation Issues

- macOS

- Windows

- Linux

- Check your macOS version: you need to be on macOS 12.0 Monterey or higher in order to run the applications.

- There are two installation packages available for Mac: one for an Intel machine and one for Apple Silicon. Please make sure you have downloaded the correct one based on your machine. You can see what chip your machine has by viewing “About This Mac.”

- If you have the correct package and it failed the first time, please restart your machine and try again.

- If you receive any error messages when attempting to install after restarting, please send over a screenshot using this support form and we can take a look.

- Check for a Windows update and restart your computer.

- Have you tried to install using both the Pieces Suite installer and an alternative method listed on our installation page? If so, please remove everything Pieces-related on your machine and retry using the Pieces Suite installer.

- If you are having an issue with App Installer, check the Microsoft Store for any updates to App Installer and make sure that the Microsoft Store itself is up-to-date.

- If you receive any error messages when attempting to install after restarting, please send over a screenshot using this support form and we can take a look.

- Please note that we only officially support Ubuntu and Ubuntu-based distros; that doesn’t mean others won’t work, but we can’t guarantee support for them.

- Please make sure that snapd is up to date. Running sudo snap refresh should bring you up to date with the most recent snap versions. Then try installing Pieces OS again.

- Try switching from X11 to Wayland or vice versa. If you have an NVIDIA graphics card, you can try switching to Hybrid Graphics settings and using third-party drivers. If you are still having issues, you may need to consult online forums in order to get Snap working again on your system.

- If you receive any error messages when attempting to install after restarting, please send over a screenshot using this support form and we can take a look.

Local Model Crashing

Troubleshooting Local LLM Issues

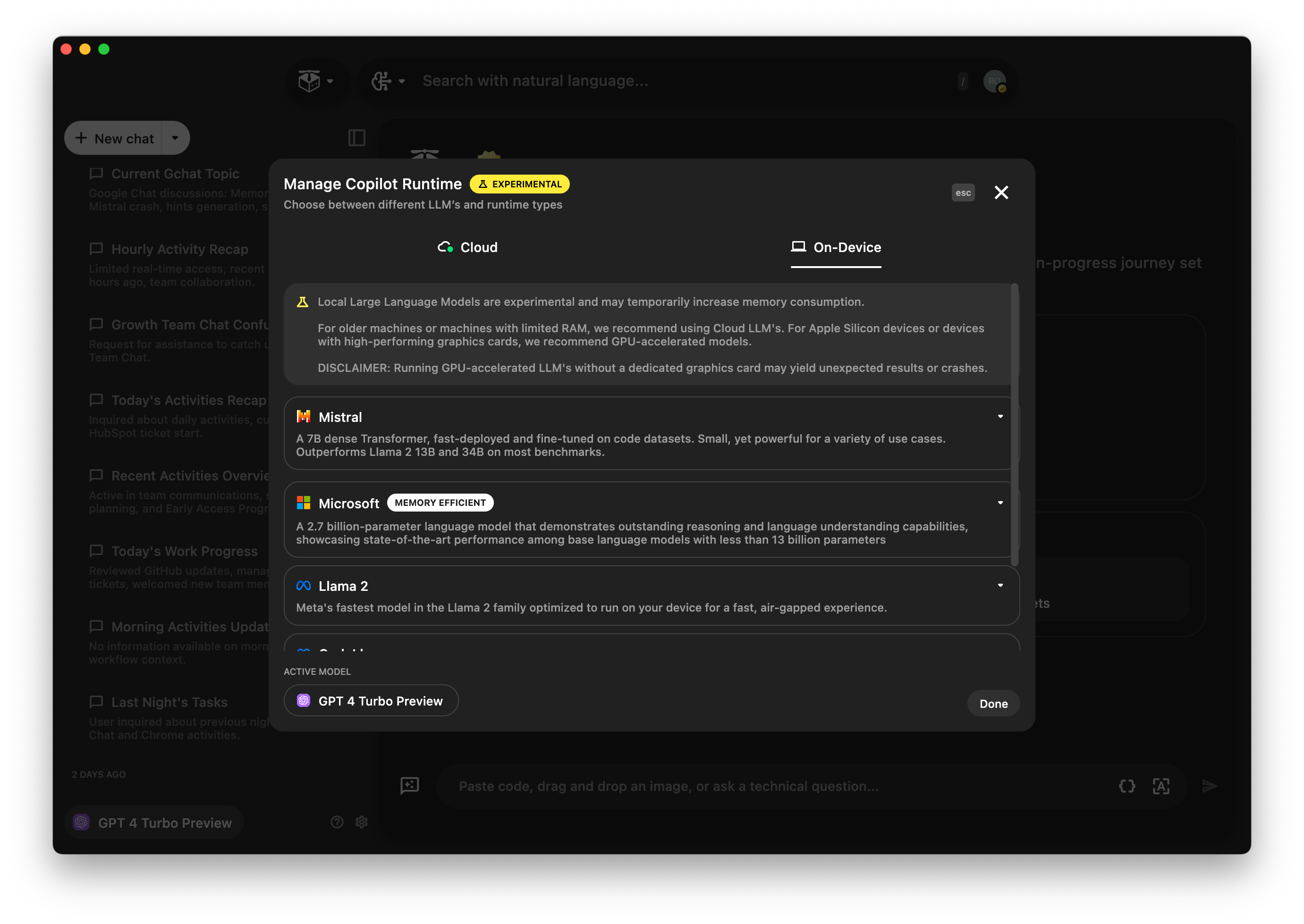

If you are receiving the error message I’m sorry, something went wrong with processing... or the Pieces application crashes when you are using an on-device model, you may be trying to run a local model that requires more resources than your machine has available, or you’ve selected a GPU model when you don’t have a dedicated GPU.

Some things to consider:

- Older machines can struggle in general with local models; you'll have the best luck with machines from 2021 or newer.

- A machine with a dedicated GPU with more than 6-7 GB of available GPU memory (VRAM) is optimal for running any of the GPU versions of the local models.

- If your machine does not have a dedicated GPU, you will likely have to use a CPU version of the model, which may not offer the same performance level.

Unfortunately, if you have an older machine or one with very low resources, you may have to use a cloud model in order to use the copilot.

Another common issue on Linux and Windows is a corrupted or outdated Vulkan API, which we use to communicate with your GPU. Vulkan should be bundled with your AMD or NVIDIA drivers, and you can check its health by running the command vulkaninfo in your terminal and scanning the resulting logs for errors or warnings. Any errors or warnings could indicate that either your GPU drivers need updating or there is an issue with the API itself. Please contact the Pieces support team if you believe your Vulkan installation is broken.
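Scanning a long vulkaninfo log by eye is tedious, so a simple text filter can narrow it down. The sketch below is a crude, case-insensitive match on the words "error" and "warning"; the function name scan_vulkan_log is hypothetical, and a matching line is only a hint, not proof of a broken installation.

```shell
# Print lines from stdin that mention an error or a warning, prefixed with
# their line numbers. Follows grep's convention: exit status 0 if at least
# one line matched, 1 if none did.
scan_vulkan_log() {
  grep -inE 'error|warning'
}

# Example (requires vulkaninfo to be installed):
#   vulkaninfo 2>&1 | scan_vulkan_log
```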

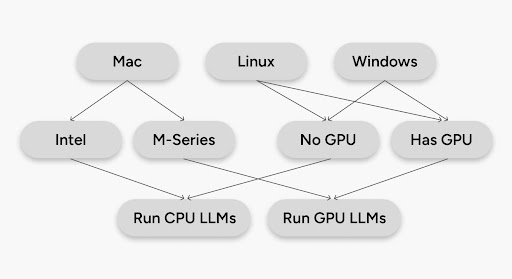

To understand how to check your machine specs and select the best model for your machine, continue on to the next section. For detailed information on the difference between GPU and CPU and the best hardware for running local models, please see our blog post about this topic.

Checking Your Machine Specs

In order to select the best local model for you, you should first check your machine specifications.

- macOS

- Windows

- Linux

- Click on the Apple logo in the top-left corner of the screen.

- Select "About This Mac".

- In the window that opens, you can see if your machine runs an Intel chip or an Apple Silicon M-series chip.

- Right-click on the taskbar and select "Task Manager".

- In the Task Manager window, go to the "Performance" tab.

- Here you can see your CPU and GPU details. Click on "GPU" to see GPU information.

- To see detailed GPU information including VRAM, click on "GPU 0" or your GPU's name.

- Open a terminal window.

- For CPU information, you can use the lscpu command.

- For GPU information, you can use commands like lspci | grep -i vga to list GPU devices.

If you don’t have this installed, you may need to consult online forums for other options for your Linux machine.
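The model requirements in the next section are stated in RAM and VRAM, so on Linux it is also worth checking total system memory. The following is a minimal sketch that reads MemTotal from /proc/meminfo; the function name mem_total_gb is hypothetical, and the value is computed with 1024-based units, so it may differ slightly from the figure your vendor advertises.

```shell
# Print total system memory in GB (one decimal place), read from a
# meminfo-style file. Defaults to /proc/meminfo, which reports MemTotal in kB.
mem_total_gb() {
  local meminfo="${1:-/proc/meminfo}"
  awk '$1 == "MemTotal:" { printf "%.1f\n", $2 / 1024 / 1024 }' "$meminfo"
}

# Example:
#   mem_total_gb
# On machines with an NVIDIA GPU and the driver tools installed, total VRAM
# can be listed with:
#   nvidia-smi --query-gpu=memory.total --format=csv
```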

GPU Requirements for Certain Models

If you have a GPU, you can review the specifications for several of our on-device models below to see if you have enough RAM for them.

- Llama2 7B, a model trained by Meta AI optimized for completing general tasks. Requires a minimum of 5.6GB RAM for the CPU model and 5.6GB of VRAM for the GPU-accelerated model.

- Mistral 7B, a dense Transformer, fast-deployed and fine-tuned on code datasets. Small, yet powerful for a variety of use cases. Requires a minimum of 6GB RAM for the CPU model and 6GB of VRAM for the GPU-accelerated model.

- Phi-2 2.7B, a small language model that demonstrates outstanding reasoning and language understanding capabilities. Requires a minimum of 3.1GB RAM for the CPU model and 3.1GB of VRAM for the GPU-accelerated model.

Note that as we continue supporting larger LLMs like 13B models from Llama 2, these local LLM hardware requirements will change.
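As a quick way to apply the minimums listed above, the sketch below compares an amount of available memory against each model's stated requirement. It is illustrative only: the function name models_that_fit is hypothetical, the figures are copied from the list above and will go stale as requirements change, and meeting the minimum does not guarantee good performance.

```shell
# List the models whose stated minimum fits in the given amount of memory (GB).
# Pass available RAM for the CPU versions, or available VRAM for the
# GPU-accelerated versions; this FAQ gives the same figure for both.
models_that_fit() {
  local available_gb="$1"
  # name:minimum GB, copied from the requirements listed in this FAQ
  printf '%s\n' 'Phi-2 2.7B:3.1' 'Llama2 7B:5.6' 'Mistral 7B:6' |
    awk -F: -v avail="$available_gb" '($2 + 0) <= (avail + 0) { print $1 }'
}

# Example: a GPU with 4 GB of free VRAM
#   models_that_fit 4
```

If the function prints nothing, none of the listed local models meets its minimum on that machine, and a cloud model is likely the better choice.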

Deciding Which Local Model To Use

You will see that all of our local models have a CPU and GPU option. Now that you know a little more about your machine and GPU vs CPU, you can use the chart below to decide whether to use a GPU or a CPU version of a model.

Once you’ve decided on a GPU or CPU version and you understand which models your machine is capable of running, the choice of model is more or less a matter of opinion, as models tend to excel in different types of knowledge (general QA, science, math, coding, etc.). We at Pieces like to use a variety of models for different purposes and to compare the answers we get based on each model’s knowledge base and training. For example, the Pieces team member writing this article likes Phi-2 for its lightweight speed, but Mistral for its quality of answers. We’ve included some links to model evaluations below, but new information is being released almost daily.

- Mistral announcing their new 7B model

- Microsoft announcing their new Phi-2 model

- HuggingFace Chatbot Arena Leaderboard

Please note that these on-device models are very experimental, and we can’t guarantee success with them. We would love for you to share your experience, good or bad, in this GitHub discussion so that we have better data for recommendations.

Non-Ubuntu Distros - Linux

Pieces for Developers currently supports Ubuntu 22.04 and up, as well as Ubuntu-based distributions. Other distributions may work, but we can't guarantee the quality of the experience.

Please join this discussion on GitHub to add your opinion about what distributions we should work on next.

Copilot Context Issues

If you are getting poor results from adding context, there are some rules of thumb to consider:

- Scope your context as much as possible: adding an entire repo and asking a specific question won’t produce as good an answer as adding a single file.

- Use good prompt engineering: avoid using only “this” or “that” as much as possible, and be specific with your questions.

  - e.g., “What does this do?” vs. “What does this code snippet do?”

- Try different models as they all have their strengths and weaknesses.

Please note that adding context to your copilot is something we are constantly iterating on and improving, so it should be getting better all the time.

Resetting the Copilot Runtime

If you encounter issues with the Copilot or simply wish to restart your session, there are several methods to reset the active runtime. This can be particularly useful if the Copilot behaves unexpectedly or freezes, or if you want to clear the current session's context and start fresh.

Methods to Reset the Active Runtime

- Double-click the "Stop" Button: Located to the right of the Copilot input box, a quick double-click on the "Stop" button will initiate a reset of the current runtime session.

- Copilot Settings Dialog: Access the Copilot settings by clicking on the settings icon or navigating through the Copilot menu. Within the settings dialog, select "Hard Reset Active Runtime" to restart the session.

- Copilot LLM Runtime Config Dialog: For more detailed runtime configurations, open the LLM Runtime Config dialog. Here, you can select "Hard Reset Active Runtime" to ensure a fresh start for your Copilot session.

Expected Response

After performing any of the above actions, you should receive a confirmation that the active runtime has been reset. This ensures that you are starting from a clean slate, allowing the Copilot to perform optimally in assisting with your coding tasks.

Account Issues

Can’t Link GitHub and Gmail Accounts

This usually happens when multiple accounts are created accidentally. We will need to work with you to fix this! Please submit a support ticket and we will help you out.

Any Other Account-Related Issues

We will need to work with you to fix this. Please submit a support ticket and we will help you out.

Remove Pieces Completely

Please note that removing your Pieces databases (step 2) will delete all of your data, including code snippets, copilot conversations, and downloaded local LLMs.

- macOS

- Windows

- Linux

- Quit Pieces and Pieces OS, then right-click on the Pieces apps (Pieces and Pieces OS) within your Applications folder and move to trash.

- Remove your local databases by deleting the com.pieces.os and com.pieces.pfd folders within your Library folder.

- If you would like your account to be removed entirely, please submit a support ticket with the email address you would like to be removed.

- Quit Pieces and PiecesOS, then right-click on the Pieces apps within your "Apps" on Windows and uninstall them.

- Remove your local databases by deleting the com.pieces.os and com.pieces.pfd folders within your Documents folder.

- If you would like your account to be removed entirely, please submit a support ticket with the email address you would like to be removed.

On Linux, to uninstall, you can run:

sudo snap remove pieces-os

sudo snap remove pieces-for-developers

Remove your local databases by deleting the com.pieces.os and com.pieces.pfd folders within your Documents folder.

If you would like your account to be removed entirely, please submit a support ticket with the email address you would like to be removed.

Custom Local LLMs

This is a frequent request, and we think it's a great idea! However, it would be a significant infrastructure change so it won't be something we'll be able to get to very soon. We have added it to our backlog and will be addressing it as soon as we can.

GitHub Integration

How do I get started with the Pieces + GitHub Integration?

- Only accounts with matching primary email addresses will be linked.

- If the accounts do not match, a new, separate account will be created.

1. Link your Account

Settings -> Connect Accounts -> Connect to Google

Verify your connection to the Pieces Cloud before moving forward

2. Authorize with GitHub

Settings -> Connect Accounts -> Connect to GitHub

After selecting the GitHub icon, a "Link your Account" menu will come into focus.

Verify your connection through the "Manage Cloud Integrations" settings section.

Check-in

At this point, you should:

- Be logged in to Pieces with a personal email and/or Google account

- Be connected to your Pieces Cloud

- Be authorized via GitHub connection

How do I Export a Gist to GitHub?

- Generate a shareable link in the Pieces desktop app

- Inside this menu, select the option "Share as GitHub Gist"

- You will see a GitHub Menu

- You can give your snippet a custom Name & Description (optional)

- Click "Create" to share to GitHub

Note: Gists can be set to private in the Share a Gist menu, though they are public by default.

How do I Import a Gist to the Pieces for Developers Desktop App?

Prerequisites to Import from GitHub:

- Connect to Pieces OS

- Sign in to Pieces via your personal email or Google account

- Authenticate with GitHub

- Your sign-in account must be linked with your GitHub account. Mismatched accounts will fail when attempting to Import from GitHub.

To Perform a Gist Import

- Choose “Discover” above your Snippet

- Choose “Select Gists”

- Make sure your Pieces account is connected to your GitHub account

- Browse your Gists in the Desktop App and select the ones you'd like to import

- Done!

If I delete a Gist in GitHub, will the snippet also be deleted from Pieces?

No. Gists deleted on GitHub are not deleted from Pieces for Developers.

I deleted a Gist from GitHub. Can I recover the original Gist?

Yes, a Gist can be recovered if you saved the original snippet in Pieces.

To Redo a Gist:

- Locate the desired snippet in the Pieces desktop app

- Navigate to the shareable link within the snippet's information view

- Click the GitHub icon to view the "Share as GitHub Gist" menu. This will repopulate your GitHub Gist list with the selected snippet.

How do I toggle Light & Dark Mode?

To toggle between Light Mode and Dark Mode, use the Keyboard Shortcut:

| macOS | Windows |
|---|---|
| ⌘ + Shift + T | CTRL + Shift + T |

Or navigate to the Pieces User Settings -> Customize -> Toggle Light Mode.

How do I convert a saved image to code or text?

In order to use Pieces OCR, you can drop a saved image into a copilot conversation. It will convert the image into a snippet that you can save to Pieces and describe the snippet for you, in addition to setting that snippet as context so you can have a customized conversation.

You can also drop an image into the saved materials view and it will create a new snippet for you. You can then select the “View as Code” button, allowing you to copy, modify, and share the extracted code as needed.

It's important to note that the accuracy of the extracted code or text may vary depending on the quality of the image and the complexity of the code.

What languages does Pieces for Developers support?

Pieces supports nearly 40 programming languages, and we are continually adding more to our list. If you have a specific language you would like to see added, please let us know by submitting a feature request.

Supported Languages:

- Batchfile

- C

- Clojure

- C#

- C++

- CoffeeScript

- CSS

- Dart

- Erlang

- Emacs Lisp

- Elixir

- Go

- Groovy

- Haskell

- HTML

- Java

- JavaScript

- JSON

- Kotlin

- Lua

- Markdown

- Matlab

- Objective-C

- Perl

- PHP

- PowerShell

- Python

- R

- Ruby

- Rust

- Scala

- Shell

- SQL

- Swift

- TeX

- Text

- TOML

- TypeScript

- XML

- YAML

How do I leverage the Pieces Actions Menu to maximize user flow?

The Actions Menu offers a wide range of valuable features that allow users to more efficiently manage and edit their content. By streamlining these processes and minimizing interruptions to the user's workflow, this feature helps to increase productivity and optimize the user's experience.

These options are accessible through a keyboard shortcut, ensuring that users can quickly navigate the menu without the need to break their focus.

Keyboard Shortcut

| macOS | Windows |
|---|---|
| ⌘ + Return | CTRL + Enter |

Where do the suggested searches in the Global Search view come from?

The suggested searches in Global Search view are made up of existing snippet tags from the user's personal repository. When a suggested search is clicked, users are directed to a list view of snippet names, titles, classifications, and commonly shared related tags and links. This powerful feature streamlines the search experience in Pieces for Developers and allows users to discover relevant code snippets with ease.

Why do I see an error for JCEF inside JetBrains when I boot?

If you see the "JCEF is not supported in this env or failed to initialize" error, here is how you can fix it:

- Go to Help -> Find action

- Search and select "Choose Boot Java Runtime for the IDE"

- In that popup, select a JetBrains runtime that has JCEF

- Restart the IDE. The error should be gone.

Read more on the JCEF Errors and JCEF - Java Chromium Embedded Framework

How can I discover useful snippets within the Pieces for Developers Desktop App?

Try using Snippet Discovery to discover snippets from an existing project or file.

For Best Results: We recommend that users add 5-10 project-specific snippets to their repository before performing Snippet Discovery on a local project.

Why?

Pieces trains Snippet Discovery on the common themes within a project to better extract what you need, when you need it.

How does "Sort by Suggested" differ from "Sort by Last Added"?

The Pieces for Developers desktop app provides users with a few options to sort their snippets. In addition to alphabetical sort and sorting by language, you can sort by suggested or by last added.

- Sort by Suggested

- This will resurface previously saved snippets and reorder your list or gallery to put relevant snippets closer to the beginning.

- It's useful for quickly accessing relevant snippets from your current workflow.

- Sort by Last Added

- This view displays your snippets in the order in which they were saved. New snippets are at the beginning.

- It's useful for users that want to stay up-to-date with their latest saves.

Can I edit a snippet in the Pieces for Developers Desktop App?

Yes! Users can easily modify their selected snippet by clicking the edit button within the snippet's text field.

Keyboard Shortcut

| macOS | Windows |
|---|---|
| ⌘ + E | CTRL + E |

To Cancel Edits, simply press the Escape key.

To Save Edits, hit the Save icon in the top right corner of the edit window. After making an edit, a success notification will verify the changes have been saved.

Overall, this feature provides a more efficient and streamlined way for developers to make quick edits to their code snippets.

Can I modify my Confirmation Notification Settings?

Yes. Users can minimize noise levels by toggling off confirmation settings for various actions in the Pieces for Developers Desktop App.

Navigate to Settings -> Customize -> Confirmation Settings

You can toggle the following confirmations:

- Created snippet

- Created snippet tag(s)

- Created related link(s)

- Added related person(s)

- Detected sensitive(s)

Additional settings that can be toggled:

- Shareable link updates

- Sensitive alerts when generating a shareable link

What are the key benefits of the Pieces for GitHub Integration?

Enhanced Collaboration

By automatically capturing relevant Git history, including collaborator profiles, commit messages, and related pull request and branch links, Pieces enables users to search their snippets via collaborator profiles, making it easier than ever to know who to reach out to or add to a pull request, ultimately enhancing collaboration.

Fully Configurable

The context captured by the Git Context feature is fully editable, searchable, and shareable, giving users complete control over their snippets and making it easy to find and reuse code. Additionally, all the context information is configurable in the Pieces Settings, allowing users to customize their experience to best fit their needs.

Context Awareness

Pieces for Developers makes it easy for developers to save from their IDE and allow the Pieces GitHub integration to automatically include a list of people who made commits to the code, enabling them to understand who wrote and interacted with the code. This feature is particularly useful for teams who want to collaborate more effectively.

I'm Disconnected from Pieces OS. Now what?

If you're unable to connect to Pieces OS:

- Navigate to your applications folder from the Finder window

- Locate Pieces OS & open the application

If you merely closed the app by accident, the steps above should resolve your connection issue.

Still not Connected?

You may need to install Pieces OS.

Head over to https://code.pieces.app/install to get started.

Still not Connected?

If you're still disconnected after installing Pieces OS, check your OS compatibility. If that fails, please contact us at support@pieces.app.

What are Collections?

Collections allow users to save curated groups of code snippets from the cloud-hosted Pieces Collections site. This makes it easy for developers to reference similar code snippets together and quickly access them when needed.

Where can I find Collections?

Keyboard Shortcut:

| macOS | Windows |
|---|---|
| ⌘ + Shift + C | CTRL + Shift + C |

You can also add collections from the "Add a Piece" menu.

What is Activity View?

In Activity View, developers can quickly and easily navigate back to their most recent work, making it an essential feature for anyone who wants to stay organized and productive.

What's inside?

In Activity View, you can see when you add or adjust context for your snippets, including:

- Related links

- Tags

- Descriptions

- Snippet names

- Snippet languages

- Related people

- Created snippets

- Snippet titles

- Snippet references

Keyboard Shortcut:

| macOS | Windows |
|---|---|
| ⌘ + Shift + A | CTRL + Shift + A |

Is this a manual process?

No. This feature updates in real time as users work, providing a comprehensive view of their recent activity, including recently opened snippets and files, edited code, and more.

Still can’t find your answer?

Check out our support page for more information and personalized assistance!